Digital + Physical Controls

In 2013, shortly after the Tesla Model S was released, I was given the chance to use one. I was very impressed by many things about it, and it had many interesting elements that pushed the industry forward.

However, one thing in particular really stood out to me – the volume control. It was two digital buttons at the bottom of the screen. They lacked haptic and orientation cues, and they were also static. Being digital, they could have at least given more visual cues or taken advantage of being dynamic.

A video made in 2 days to demonstrate the concept

Made using Cinema4D and After Effects

I set out to make a quick concept that married the best of both worlds: a knob with the benefits of a physical control, overhanging a screen that added dynamic feedback whenever the knob was interacted with.

This video led to a larger, funded project that explored this interaction beyond the volume knob.

A few years later, I revisited the above video to play with some new learnings in Cinema4D. Below is a more refined version.

Updated video using Cinema4D and After Effects

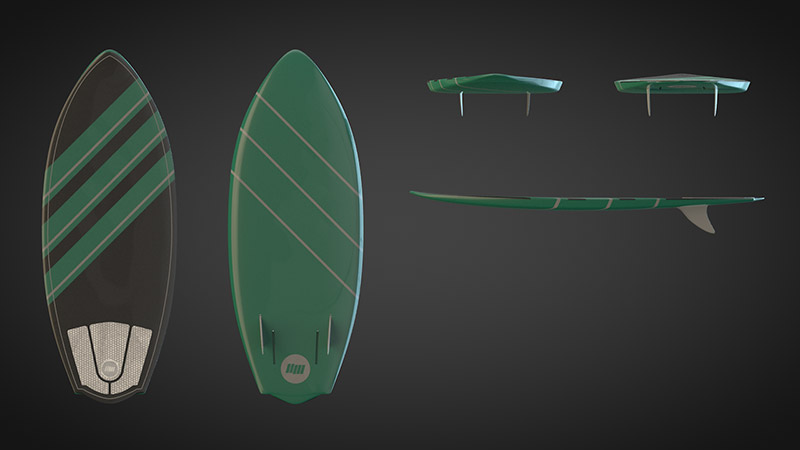

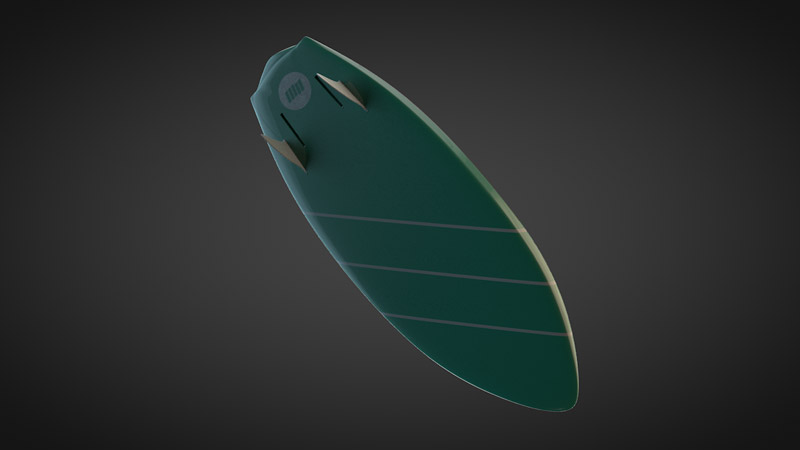

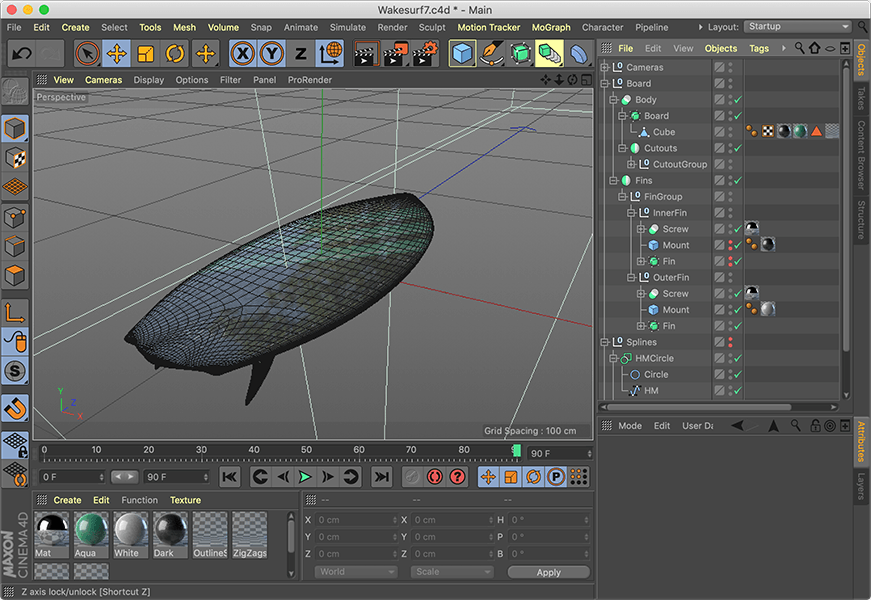

Custom Wakesurf Board

In 2017 I started getting into wakesurfing. For fun, I wanted to challenge myself to design and model my own board.

This was modeled, textured, lit, and animated all within Cinema4D

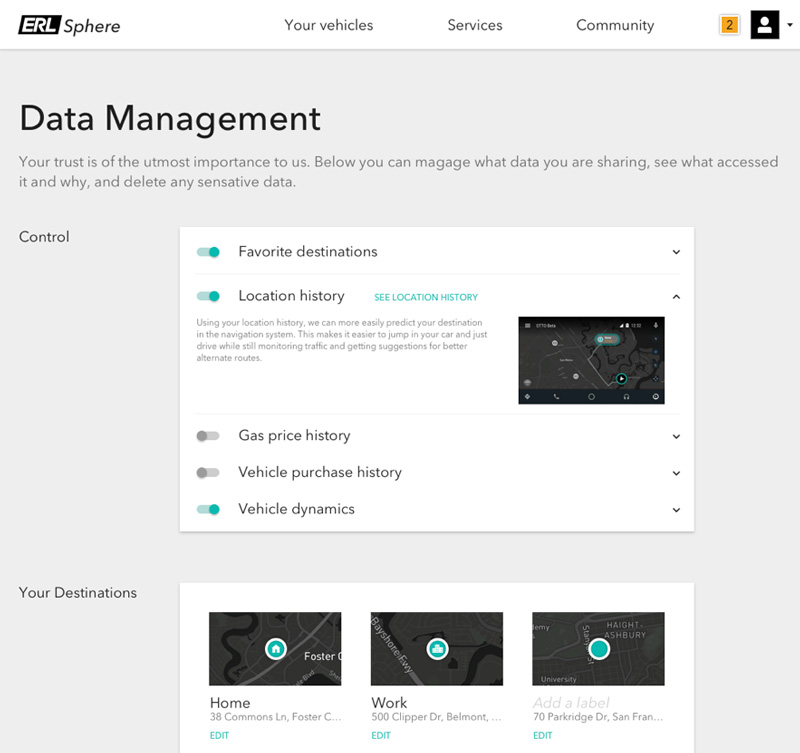

Customer Trust + Blockchain

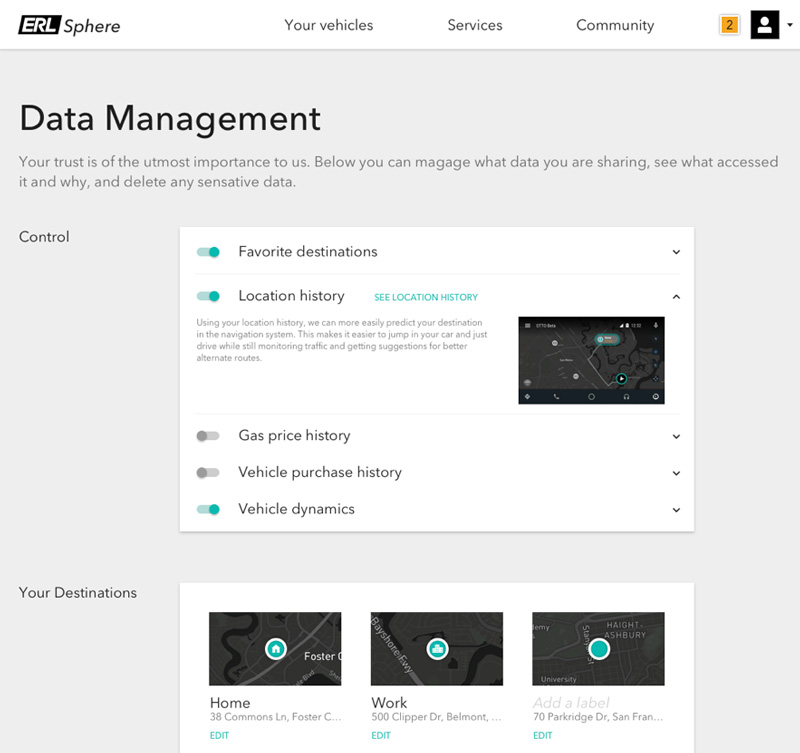

Building a multi-channel digital brand touchpoint created a lot of exciting opportunities. It allowed for better integrations throughout the customer journey and for new services to solve pain points.

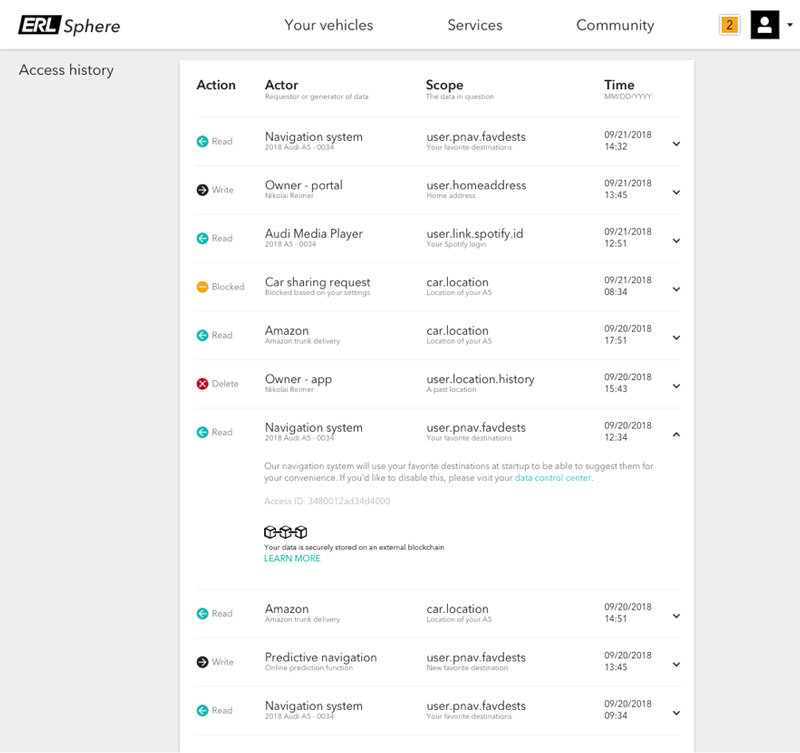

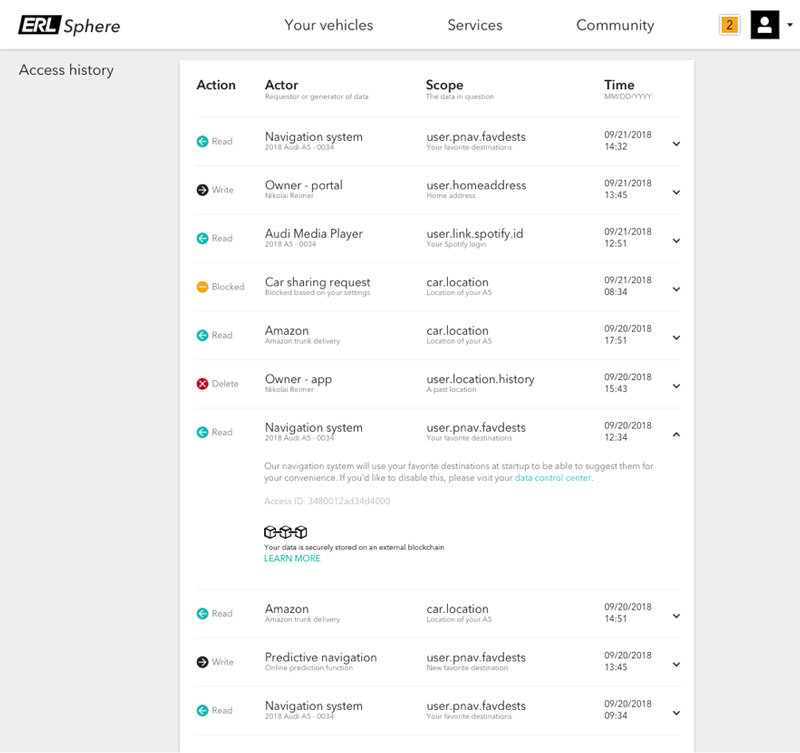

However, one thing was more important: gaining and retaining the customer's trust. Prominently on the portal, we made explicit what data was being made available and let users control and manage this data at a very granular level.

Data management portal gives transparency to the user

More importantly, we wanted to build trust beyond what we had direct control over. We allowed customers to connect a variety of 3rd party services to their account. Connecting these services can obscure who is accessing the pool of data and why.

We wanted to hold everyone accountable by ensuring that neither the company nor any 3rd party could access customer data without an entry in a log or ledger. Using blockchain to ensure this ledger couldn't be tampered with, we made very clear who was accessing which pieces of data through API calls, and why.

Using blockchain to ensure transparency and privacy
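
To illustrate the tamper-evidence idea behind such a ledger, here is a minimal sketch in Python. It is not the system we built and it is not a full blockchain (there is no distribution or consensus); it only shows hash chaining, where each record stores the hash of the record before it. The `AccessLedger` class, its field names, and the example accessors are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Placeholder "previous hash" for the very first record (an assumption of this sketch).
GENESIS = "0" * 64


def _digest(entry: dict, prev_hash: str) -> str:
    """Hash an entry together with the hash of the record before it."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class AccessLedger:
    """Hypothetical append-only log of who accessed which data field and why."""

    records: list = field(default_factory=list)

    def append(self, accessor: str, data_field: str, purpose: str) -> str:
        entry = {"accessor": accessor, "field": data_field, "purpose": purpose}
        prev_hash = self.records[-1]["hash"] if self.records else GENESIS
        record = {
            "entry": entry,
            "prev_hash": prev_hash,
            "hash": _digest(entry, prev_hash),
        }
        self.records.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered record breaks the chain."""
        prev_hash = GENESIS
        for record in self.records:
            if record["prev_hash"] != prev_hash:
                return False
            if record["hash"] != _digest(record["entry"], prev_hash):
                return False
            prev_hash = record["hash"]
        return True
```

Appending a record for each API call and then calling `verify()` returns `True` as long as nothing has been altered. Changing any stored entry afterwards, for example rewriting a `purpose`, makes `verify()` return `False`, because the stored hash no longer matches the recomputed one.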

Mobility Assistant + Android Auto

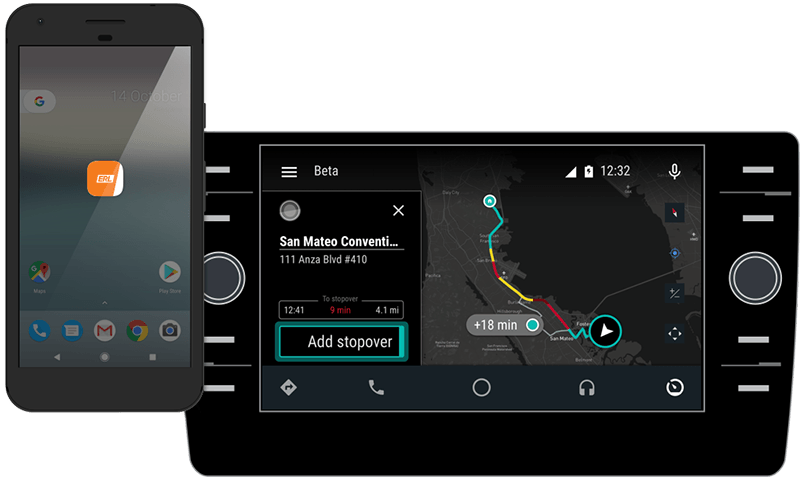

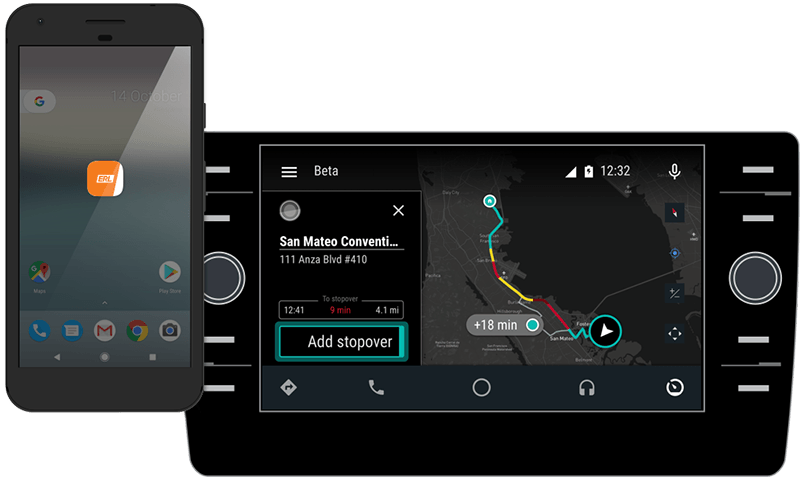

We worked to design an assistant that was focused on mobility. It became obvious that we needed to build out the user base to better collect utterances and infer intents. We needed internal employees to dogfood the product and use it in their everyday lives.

The assistant was available on many channels, but the majority of mobility-related use cases happen while driving. Instead of building out a fleet of vehicles with custom hardware, I pushed for an integration that could be used in any car with Android Auto capabilities. The team and I built a mapping app that let users use our mobility-focused assistant in their everyday lives and commutes.

Android Auto projection allowed for many more users

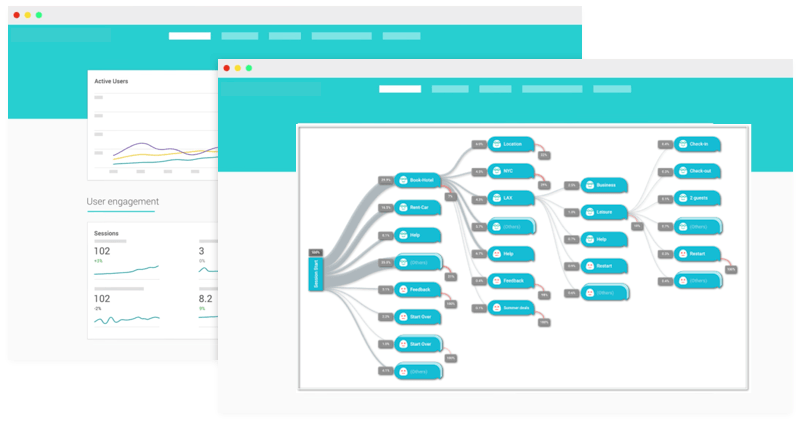

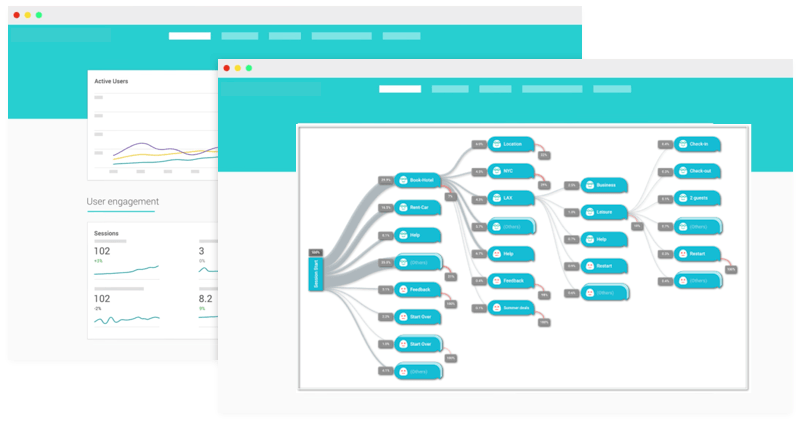

We launched with a hyper-specialized subset of skills and intents. Using Dialogflow, the team was able to see organic requests from our users, which I used to design new VUI flows and use cases.

Dialogflow helped us improve the assistant using real-world use-cases

Digital Instrument Cluster

A few weeks before an internal show, it became clear that one of the showcars did not have anything planned for the instrument cluster screen (the screen behind the steering wheel).

Given only two weeks, I alone designed a system, matched the existing aesthetic of the other screen, created the assets, and animated a looping video.

Showcar instrument created in Illustrator, After Effects, Cinema4D, and Element 3D

This video was shown in place of a blank screen and helped make a good impression on the VIP round at the showcase.

Concept Car Pre-visualization

In 2012 I was part of a team helping to define an internal vision car for VW's first electric vehicle (EV). During our definition phase there were some characteristics that we really wanted to accentuate: the feeling of lightness, the roominess that EVs bring, and a sense of novelty.

Below are some product pre-visualizations I did to help communicate to our counterparts in Germany what needed to be built.

Instrument cluster visualization

Center screen with embedded touch and LEDs that changed contextually

The acrylic bezel created the illusion of a light display and showcased the absence of a traditional engine and its components, highlighting the space afforded by the new drivetrain.